Personality Traits and Communication Skills Assessment Modeling

Interpersonal communication is the process of exchanging information, ideas, and emotions between two or more individuals. Effective interpersonal communication skills are essential in our global and multicultural society, where people from diverse backgrounds interact with each other. Communication skills include verbal and nonverbal cues, active listening, empathy, and emotional intelligence. Without these skills, misunderstandings can occur, leading to conflicts and limiting productivity in personal and professional relationships. Therefore, it is essential to assess these skills and improve them to ensure successful communication.

Manual assessment of interpersonal communication skills by experts is usually time-consuming and expensive. Moreover, manual assessment can be subjective, leading to biased results. This research aims to assess these skills automatically using social signal processing (SSP). SSP refers to the process of analyzing and interpreting nonverbal social signals, such as facial expressions, body language, and tone of voice, to understand human behavior and emotions. SSP algorithms use machine learning and computer vision techniques to extract social cues and interpret them to make judgments about human behavior and emotional states.

SSP has several potential applications, including improving human-human and human-computer interaction. With the use of SSP algorithms, computers can sense, understand, and respond intelligently and naturally to human emotional feedback. This capability enables machines to respond to human emotions, which can improve user experience and engagement. SSP can also enhance human-human communication by improving social awareness, reducing misunderstandings, and enhancing empathy. Therefore, SSP has the potential to revolutionize communication and interaction in our global and multicultural society by enabling better understanding and connection between people of different backgrounds.

Related Publications

Beyond accuracy: Multimodal modeling of structured speaking skill indices in young adolescents

Computers and Education: Artificial Intelligence, Vol. 8, June 2025, 100386.

This study introduces a novel method for explainable speaking skill assessment that utilizes a unique dataset featuring video recordings of conversational interviews for high-stakes outcomes (i.e., admission to high schools and universities). Unlike traditional automated speaking assessments that prioritize accuracy at the expense of interpretability, our approach employs a new multimodal dataset that integrates acoustic and linguistic features, visual cues, turn-taking patterns, and expert-derived scores quantifying various speaking skill aspects observed during interviews with young adolescents. This dataset is distinguished by its open-ended question format, which allows for varied responses from interviewees, providing a rich basis for analysis. The experimental results demonstrate that fusing interpretable features, including prosody, action units, and turn-taking, significantly enhances the accuracy of spoken English skill prediction, achieving an overall accuracy of 83% when a machine learning model based on the light gradient boosting algorithm is used. Furthermore, this research underscores the significant influence of external factors, such as interviewer behavior and the interview setting, particularly on the coherence aspect of spoken English proficiency. This focus on an innovative dataset and interpretable assessment tools offers a more nuanced understanding of speaking skills in high-stakes contexts than that offered by previous studies.

Influence of Personality Traits and Demographics on Rapport Recognition Using Adversarial Learning

Multimodal Technol. Interact. 2025, 9(3), 18.

The automatic recognition of user rapport at the dialogue level for multimodal dialogue systems (MDSs) is a critical component of effective dialogue system management. Both the dialogue systems and their evaluations need to be based on user expressions. Numerous studies have demonstrated that user personalities and demographic data such as age and gender significantly affect user expression. Neglecting users’ personalities and demographic data will result in less accurate user expression and rapport recognition. To the best of our knowledge, no existing studies have considered the effects of users’ personalities and demographic data on the automatic recognition of user rapport in MDSs. To analyze the influence of users’ personalities and demographic data on dialogue-level user rapport recognition, we first used the Hazumi dataset, an online dataset containing users’ personal information (personality, age, and gender information). Based on this dataset, we analyzed the relationship between user rapport in dialogue systems and users’ traits, finding that gender and age significantly influence the recognition of user rapport. These factors could potentially introduce biases into the model. To mitigate the impact of users’ traits, we introduced an adversarial-based model. Experimental results showed a significant improvement in user rapport recognition compared to models that do not account for users’ traits. To validate our multimodal modeling approach, we compared it to human perception and instruction-based Large Language Models (LLMs). The results showed that our model outperforms both human perception and instruction-based LLMs.

MAG-BERT-ARL for Fair Automated Video Interview Assessment.

IEEE Access, 2024.

Potential biases within automated video interview assessment algorithms may disadvantage specific demographics due to the collection of sensitive attributes, which are regulated by the General Data Protection Regulation (GDPR). To mitigate these fairness concerns, this research introduces MAG-BERT-ARL, an automated video interview assessment system that eliminates reliance on sensitive attributes. MAG-BERT-ARL integrates the Multimodal Adaptation Gate and Bidirectional Encoder Representations from Transformers (MAG-BERT) model with Adversarially Reweighted Learning (ARL). This integration aims to improve the performance of underrepresented groups by promoting Rawlsian Max-Min Fairness. Through experiments on the Educational Testing Service (ETS) and First Impressions (FI) datasets, the proposed method demonstrates its effectiveness in optimizing model performance (increasing the Pearson correlation coefficient up to 0.17 in the FI dataset and precision up to 0.39 in the ETS dataset) and fairness (reducing equal accuracy up to 0.11 in the ETS dataset). The findings underscore the significance of integrating fairness-enhancing techniques like ARL and highlight the impact of incorporating nonverbal cues on hiring decisions.
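To make the Rawlsian max-min criterion concrete, the following minimal sketch scores a model by the accuracy of its worst-off group. It is an evaluation-side illustration only, not the ARL training procedure, which by design does not use group labels. The function names and the toy labels are hypothetical.

```python
def group_accuracies(y_true, y_pred, groups):
    """Return accuracy per group as a dict {group: accuracy}."""
    correct, total = {}, {}
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] = total.get(g, 0) + 1
        correct[g] = correct.get(g, 0) + (1 if t == p else 0)
    return {g: correct[g] / total[g] for g in total}


def worst_group_accuracy(y_true, y_pred, groups):
    """Max-min view: a model is judged by its worst-performing group."""
    return min(group_accuracies(y_true, y_pred, groups).values())


# Toy example (hypothetical labels): group "b" is served worse than group "a".
y_true = [1, 0, 1, 1]
y_pred = [1, 0, 0, 1]
groups = ["a", "a", "b", "b"]
print(group_accuracies(y_true, y_pred, groups))
print(worst_group_accuracy(y_true, y_pred, groups))
```

Under this view, of two models with similar overall accuracy, the one with the higher worst-group accuracy would be preferred.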

A Ranking Model for Evaluation of Conversation Partners Based on Rapport Levels.

IEEE Access, 2023.

Our proposed ranking model ranks conversation partners based on self-reported rapport levels for each participant. The model is important for tasks that recommend interaction partners based on user rapport built in past interactions, such as matchmaking between a student and a teacher in one-to-one online language classes. To rank conversation partners, we can use a regression model that predicts rapport ratings. It is, however, challenging to learn the mapping from the participants' behavior to their associated rapport ratings because a subjective scale for rapport ratings may vary across different participants. Hence, we propose a ranking model trained via preference learning (PL). The model avoids the subjective scale bias because the model is trained to predict ordinal relations between two conversation partners based on rapport ratings reported by the same participant. The input of the model is multimodal (acoustic and linguistic) features extracted from two participants' behaviors in an interaction. Since there is no publicly available dataset for validating the ranking model, we created a new dataset composed of online dyadic (person-to-person) interactions between a participant and several different conversation partners. We compare the ranking model trained via preference learning with the regression model by using evaluation metrics for the ranking. The experimental results show that preference learning is a more suitable approach for ranking conversation partners. Furthermore, we investigate the effect of each modality and the different stages of rapport development on the ranking performance.
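The idea of learning from ordinal relations within one rater can be sketched as follows. This is a minimal illustration, assuming a linear scoring function trained with a pairwise logistic loss on feature differences; the paper's actual model and features are not specified here, and the one-dimensional features and rater names are hypothetical. Pairs are formed only between partners rated by the same participant, so each rater's personal scale never has to be compared with another's.

```python
import math
import random


def make_pairs(ratings):
    """ratings: list of (rater_id, features, rapport_score).
    Build (feature_difference, label) pairs only within the same rater."""
    by_rater = {}
    for rater, feats, score in ratings:
        by_rater.setdefault(rater, []).append((feats, score))
    pairs = []
    for items in by_rater.values():
        for i in range(len(items)):
            for j in range(i + 1, len(items)):
                (fa, sa), (fb, sb) = items[i], items[j]
                if sa == sb:
                    continue  # ties carry no ordinal information
                diff = [a - b for a, b in zip(fa, fb)]
                pairs.append((diff, 1.0 if sa > sb else 0.0))
    return pairs


def train_ranker(pairs, dim, epochs=200, lr=0.1, seed=0):
    """Fit weights w so that sigmoid(w . (fa - fb)) predicts 'a preferred over b'."""
    rng = random.Random(seed)
    w = [0.0] * dim
    pairs = list(pairs)
    for _ in range(epochs):
        rng.shuffle(pairs)
        for diff, label in pairs:
            z = sum(wi * di for wi, di in zip(w, diff))
            p = 1.0 / (1.0 + math.exp(-z))
            for k in range(dim):
                w[k] += lr * (label - p) * diff[k]
    return w


def score(w, feats):
    """Ranking score of one conversation partner."""
    return sum(wi * fi for wi, fi in zip(w, feats))


# Two raters who use different parts of the rating scale (hypothetical data).
toy = [
    ("rater_A", [0.1], 1), ("rater_A", [0.5], 2), ("rater_A", [0.9], 3),
    ("rater_B", [0.2], 5), ("rater_B", [0.6], 6), ("rater_B", [0.8], 7),
]
w = train_ranker(make_pairs(toy), dim=1)
ranked = sorted([[0.9], [0.1], [0.5]], key=lambda f: score(w, f), reverse=True)
print(ranked)
```

A regression model fitted to the raw ratings would have to reconcile the two raters' scales; the pairwise formulation only uses who was rated higher by the same rater.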

Inter-person Intra-modality Attention Based Model for Dyadic Interaction Engagement Prediction.

The 25th HCI International Conference, HCII 2023, Copenhagen, Denmark, July 23–28, 2023.

With the rapid development of artificial agents, more researchers have explored the importance of user engagement level prediction. Real-time user engagement level prediction assists the agent in properly adjusting its policy for the interaction. However, the existing engagement modeling lacks the element of interpersonal synchrony, a temporal behavior alignment closely related to the engagement level. Part of this is because the synchrony phenomenon is complex and hard to delimit. With this background, we aim to develop a model suitable for temporal interpersonal features with the help of modern data-driven machine learning methods. Based on previous studies, we select multiple non-verbal modalities of dyadic interactions as predictive features and design a multi-stream attention model to capture the interpersonal temporal relationship of each modality. Furthermore, we experiment with two additional embedding schemas according to the synchrony definitions in psychology. Finally, we compare our model with a conventional structure that emphasizes the multimodal features within an individual. Our experiments showed the effectiveness of the intra-modal inter-person design in engagement prediction. However, the attempt to manipulate the embeddings failed to improve the performance. In the end, we discuss the experimental results and elaborate on the limitations of our work.

Investigating the Effect of Linguistic Features on Personality and Job Performance Predictions.

The 25th HCI International Conference, HCII 2023, Copenhagen, Denmark, July 23–28, 2023.

Personality traits are known to have a high correlation with job performance. On the other hand, there is a strong relationship between language and personality. In this paper, we presented a neural network model for inferring personality and hirability. Our model was trained only on linguistic features but achieved good results by incorporating transfer learning and multi-task learning techniques. The model improved the F1 score by 5.6 percentage points on the Hiring Recommendation label compared to previous work. The effect of different Automatic Speech Recognition systems on the performance of the models was also shown and discussed. Lastly, our analysis suggested that the model makes better judgments about hirability scores when personality trait information is available.

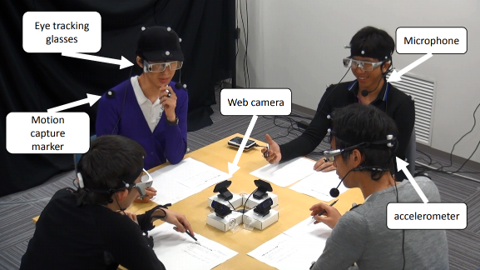

Personality Trait Estimation in Group Discussions using Multimodal Analysis and Speaker Embedding.

Journal on Multimodal User Interfaces, 2023.

The automatic estimation of personality traits is essential for many human-computer interface (HCI) applications. This paper focused on improving Big Five personality trait estimation in group discussions via multimodal analysis and transfer learning with the state-of-the-art speaker individuality feature, namely, the identity vector (i-vector) speaker embedding. The experiments were carried out by investigating the effective and robust multimodal features for estimation with two group discussion datasets, i.e., the Multimodal Task-Oriented Group Discussion (MATRICS) (in Japanese) and Emergent Leadership (ELEA) (in European languages) corpora. Subsequently, the evaluation was conducted by using leave-one-person-out cross-validation (LOPCV) and ablation tests to compare the effectiveness of each modality. The overall results showed that the speaker-dependent features, e.g., the i-vector, effectively improved the prediction accuracy of Big Five personality trait estimation. In addition, the experimental results showed that audio-related features were the most prominent features in both corpora.
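Leave-one-person-out cross-validation holds out all samples of one person per fold, so a model is never tested on someone it saw during training. The sketch below shows only the splitting step, under the assumption that each sample carries a person identifier; the function name and the identifiers are hypothetical.

```python
def leave_one_person_out(person_ids):
    """Yield (held_out_person, train_indices, test_indices), one fold per person."""
    for person in sorted(set(person_ids)):
        test_idx = [i for i, p in enumerate(person_ids) if p == person]
        train_idx = [i for i, p in enumerate(person_ids) if p != person]
        yield person, train_idx, test_idx


# Five samples from three participants (hypothetical identifiers).
person_ids = ["p1", "p1", "p2", "p3", "p3"]
for person, train_idx, test_idx in leave_one_person_out(person_ids):
    print(person, train_idx, test_idx)
```

Splitting by person rather than by sample matters most when speaker-dependent features are involved, since a random split could leak a person's identity from the training set into the test set.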

Multimodal Analysis for Communication Skill and Self-Efficacy Level Estimation in Job Interview Scenario.

The 21st International Conference on Mobile and Ubiquitous Multimedia (ACM MUM 2022), Lisbon, Portugal, 27–30 November 2022.

An interview for a job recruiting process requires applicants to demonstrate their communication skills. Interviewees sometimes become nervous about the interview because interviewees themselves do not know their assessed score. This study investigates the relationship between the communication skill (CS) and the self-efficacy level (SE) of interviewees through multimodal modeling. We also clarify the difference between effective features in the prediction of CS and SE labels. For this purpose, we collect a novel multimodal job interview data corpus by using a job interview agent system where users experience the interview using a virtual reality head-mounted display (VR-HMD). The data corpus includes annotations of CS by third-party experts and SE annotations by the interviewees. The data corpus also includes various kinds of multimodal data, including audio, biological (i.e., physiological), gaze, and language data. We present two types of regression models, linear regression and sequential-based regression models, to predict CS, SE, and the gap (GA) between skill and self-efficacy. Finally, we report that the model with acoustic, gaze, and linguistic features has the best regression accuracy in CS prediction (correlation coefficient r = 0.637). Furthermore, the regression model with biological features achieves the best accuracy in SE prediction (r = 0.330).
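The reported r values measure how closely predicted scores track the annotated ones. The abstract does not say which correlation coefficient is used; the sketch below assumes Pearson's r, and the function name and sample values are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of numbers."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical annotated scores and model predictions.
annotated = [3.0, 4.5, 2.0, 5.0]
predicted = [2.8, 4.0, 2.5, 4.6]
print(round(pearson_r(annotated, predicted), 3))
```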

Task-independent Recognition of Communication Skills in Group Interaction Using Time-series Modeling.

ACM Transactions on Multimedia Computing Communications and Applications, vol. 17, no. 4, pp. 122:1-122:27, 2021.

Case studies of group discussions are considered an effective way to assess communication skills (CS). This method can help researchers evaluate participants’ engagement with each other in a specific realistic context. In this article, multimodal analysis was performed to estimate CS indices using a three-task-type group discussion dataset, the MATRICS corpus. The current research investigated the effectiveness of engaging both static and time-series modeling, especially in task-independent settings. This investigation aimed to understand three main points: first, the effectiveness of time-series modeling compared to nonsequential modeling; second, multimodal analysis in a task-independent setting; and third, important differences to consider when dealing with task-dependent and task-independent settings, specifically in terms of modalities and prediction models. Several modalities were extracted (e.g., acoustics, speaking turns, linguistic-related movement, dialog tags, head motions, and face feature sets) for inferring the CS indices as a regression task. Three predictive models, including support vector regression (SVR), long short-term memory (LSTM), and an enhanced time-series model (an LSTM model with a combination of static and time-series features), were taken into account in this study. Our evaluation was conducted by using the R² score in a cross-validation scheme. The experimental results suggested that time-series modeling can improve the performance of multimodal analysis significantly in the task-dependent setting (with the best R² = 0.797 for the total CS index), with word2vec being the most prominent feature. Unfortunately, highly context-related features did not fit well with the task-independent setting. Thus, we propose an enhanced LSTM model for dealing with task-independent settings, and we successfully obtained better performance with the enhanced model than with the conventional SVR and LSTM models (the best R² = 0.602 for the total CS index). In other words, our study shows that a particular time-series modeling can outperform traditional nonsequential modeling for automatically estimating the CS indices of a participant in a group discussion with regard to task dependency.
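For readers unfamiliar with the metric, the sketch below computes the coefficient of determination, assuming the standard definition (one minus the ratio of residual to total sum of squares). A value of 1 means perfect prediction and 0 means no better than always predicting the mean. The function name and sample values are hypothetical.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when y_true is constant")
    return 1.0 - ss_res / ss_tot


# Hypothetical CS index annotations and predictions.
annotated = [10.0, 12.0, 15.0, 11.0]
predicted = [10.5, 12.5, 14.0, 11.0]
print(round(r2_score(annotated, predicted), 3))
```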

Multimodal BigFive Personality Trait Analysis using Communication Skill Indices and Multiple Discussion Types Dataset.

Springer LNCS Social Computing and Social Media: Design, Human Behavior, and Analytics, Springer, vol. 11578, 2019.

This paper focuses on multimodal analysis of a dataset with multiple discussion types for estimating BigFive personality traits. The analysis was conducted to achieve two goals: first, clarifying the effectiveness of multimodal features and communication skill indices in predicting the BigFive personality traits; second, identifying the relationship among multimodal features, discussion type, and the BigFive personality traits. The MATRICS corpus, which contains three discussion task types, was utilized in this experiment. From this corpus, three sets of multimodal features (acoustic, head motion, and linguistic) and communication skill indices were extracted as the input for our binary classification system. The evaluation was conducted by using the F1-score in 10-fold cross-validation. The experimental results showed that the communication skill indices are important in estimating the agreeableness trait. In addition, the scope and freedom of conversation affected the performance of the personality trait estimator: the freer the discussion, the better the estimator performs.